Last week you may have read about a website called prosecraft.io, a site with an index of some 25,000 books. It provided a variety of data about the texts (how long, how many adverbs, how much passive voice), a chart showing sentiment analysis of each work in its collection, and short snippets from the texts themselves: two paragraphs representing the most and least vivid passages from each text. Overall, it was a somewhat interesting tool, promoted to authors as a way to better understand how their work compares to other published works.

The news cycle about prosecraft.io was about the campaign to get its creator Benji Smith to take the site down (he now has) based on allegations of copyright infringement. A Gizmodo story about it generated lots of attention, and it’s been written up extensively, for example here, here, here, and here.

It’s been written about enough that I won’t repeat the whole saga here. However, I think a few observations are worth sharing:

1) Don’t get your legal advice from Twitter (or whatever it’s called)

“Fair Use does not, by any stretch of the imagination, allow you to use an author’s entire copyrighted work without permission as a part of a data training program that feeds into your own ‘AI algorithm.’” – Linda Codega, Gizmodo (a sentiment that was retweeted extensively)

Fair use actually allows quite a few situations where you can copy an entire work, including situations when you can use it as part of a data training program (and calling an algorithm “AI” doesn’t magically transform it into something unlawful). For example, way back in 2002 in Kelly v. Arriba Soft, the 9th Circuit concluded that it was fair use to make full copies of images found on the internet for the purpose of enabling web image search. Similarly, in A.V. ex rel. Vanderhye v. iParadigms, the 4th Circuit in 2009 concluded that it was fair use to make full-text copies of academic papers for use in a plagiarism detection tool.

Most relevant to prosecraft, in Authors Guild v. HathiTrust (2014) and Authors Guild v. Google (2015) the Second Circuit held that copying millions of books for purposes of creating a massive search engine of their contents was fair use. Google produced full-text searchable databases of the works, and displayed short snippets containing whatever term the user had searched for (quite similar to prosecraft’s outputs). That functionality also enabled a wide range of computer-aided textual analysis, as the court explained:

The search engine also makes possible new forms of research, known as “text mining” and “data mining.” Google’s “ngrams” research tool draws on the Google Library Project corpus to furnish statistical information to Internet users about the frequency of word and phrase usage over centuries. This tool permits users to discern fluctuations of interest in a particular subject over time and space by showing increases and decreases in the frequency of reference and usage in different periods and different linguistic regions. It also allows researchers to comb over the tens of millions of books Google has scanned in order to examine “word frequencies, syntactic patterns, and thematic markers” and to derive information on how nomenclature, linguistic usage, and literary style have changed over time. Authors Guild, Inc., 954 F.Supp.2d at 287. The district court gave as an example “track[ing] the frequency of references to the United States as a single entity (‘the United States is’) versus references to the United States in the plural (‘the United States are’) and how that usage has changed over time.”
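To make the district court’s example concrete, here is a minimal sketch of that kind of phrase-frequency tracking. It is not Google’s ngrams implementation; the function name `phrase_counts_by_year` and the toy corpus are invented for illustration, and a real tool would normalize by the total amount of text published each year.

```python
import re
from collections import Counter


def phrase_counts_by_year(corpus, phrase):
    """Count case-insensitive occurrences of `phrase` per publication year.

    `corpus` is an iterable of (year, text) pairs. This is an assumed,
    simplified input format, not the format of any real dataset.
    """
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    counts = Counter()
    for year, text in corpus:
        counts[year] += len(pattern.findall(text))
    return dict(counts)


# Hypothetical toy corpus, invented purely for illustration.
toy_corpus = [
    (1850, "The United States are bound by treaty. The United States are at peace."),
    (1850, "It is said the United States is a young nation."),
    (1950, "The United States is a member. The United States is committed."),
]

plural = phrase_counts_by_year(toy_corpus, "the United States are")
singular = phrase_counts_by_year(toy_corpus, "the United States is")

print("plural:  ", plural)
print("singular:", singular)
```

Note that the output is a table of counts, not the text of the books themselves, which is the point the court was making about this kind of analysis.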

While there are a number of generative AI cases pending (a nice summary of them is here) that I agree raise some additional legal questions beyond those directly answered in Google Books, the kind of textual analysis that prosecraft.io offered seems remarkably similar to the kinds of things that the courts have already said are permissible fair uses.

2) Text and data mining analysis has broad benefits

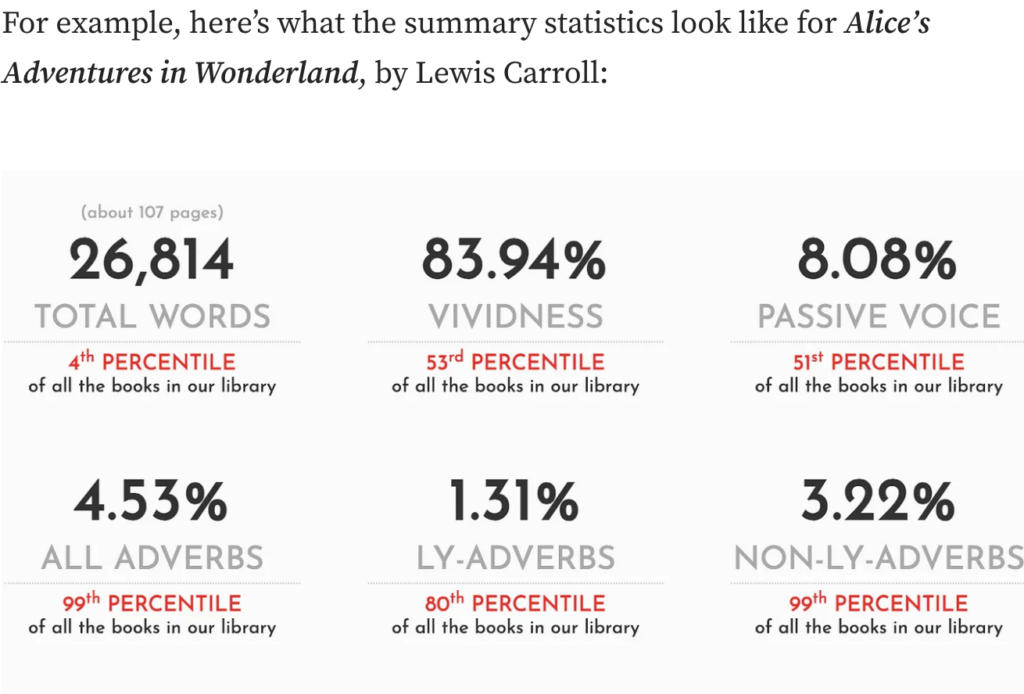

Not only is text mining fair use, it also yields some amazing insights that truly “promote the progress of Science,” which is what copyright law is all about. Prosecraft offered some pretty basic insights into published books – how long, how many adverbs, and the like. I can understand opinions being split on whether that kind of information is actually helpful for current or aspiring authors. But text mining can reveal so much more.

In the submission Authors Alliance made to the US Copyright Office three years ago in support of a Section 1201 Exemption permitting text data mining, we explained:

TDM makes it possible to sift through substantial amounts of information to draw groundbreaking conclusions. This is true across disciplines. In medical science, TDM has been used to perform an overview of a mass of coronavirus literature. Researchers have also begun to explore the technique’s promise for extracting clinically actionable information from biomedical publications and clinical notes. Others have assessed its promise for drawing insights from the masses of medical images and associated reports that hospitals accumulate.

In social science, studies have used TDM to analyze job advertisements to identify direct discrimination during the hiring process. It has also been used to study police officer body-worn camera footage, uncovering that police officers speak less respectfully to Black than to white community members even under similar circumstances.

TDM also shows great promise for drawing insights from literary works and motion pictures. Regarding literature, some 221,597 fiction books were printed in English in 2015 alone, more than a single scholar could read in a lifetime. TDM allows researchers to “‘scale up’ more familiar humanistic approaches and investigate questions of how literary genres evolve, how literary style circulates within and across linguistic contexts, and how patterns of racial discourse in society at large filter down into literary expression.” TDM has been used to “observe trends such as the marked decline in fiction written from a first-person point of view that took place from the mid-late 1700s to the early-mid 1800s, the weakening of gender stereotypes, and the staying power of literary standards over time.” Those who apply TDM to motion pictures view the technique as every bit as promising for their field. Researchers believe the technique will provide insight into the politics of representation in the Network era of American television, into what elements make a movie a Hollywood blockbuster, and into whether it is possible to identify the components that make up a director’s unique visual style [citing numerous letters in support of the TDM exemption from researchers].

3) Text and data mining is not new and it’s not a threat to authors

Text mining of the sort prosecraft seems to have employed isn’t some kind of new phenomenon. Marti Hearst, a professor at UC Berkeley’s iSchool, explained the basics in this classic 2003 piece. Each year, scores of computer science students experiment in their courses with projects that do almost exactly what prosecraft was producing. Textbooks like Matt Jockers’s Text Analysis with R for Students of Literature have been widely adopted all across the U.S. to teach these techniques. Our submissions during our petition for the DMCA exemption for text and data mining back in 2020 included 14 separate letters of support from authors and researchers engaged in text data mining research, and even more researchers are currently working on TDM projects. While fears over generative AI may be justified for some creators (and we are certainly not oblivious to the threat of various forms of economic displacement), it’s important to remember that text data mining on textual works is not the same as generative AI. On the contrary, it is a fair use that enriches and deepens our understanding of literature rather than harming the authors who create it.
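For a sense of how modest a classroom-style version of these statistics can be, here is a rough sketch of the kind of counts described at the top of this post (length, adverbs, passive voice). To be clear, this is not prosecraft’s code or method, which I have not seen; the function name `basic_stats` and both heuristics are my own assumptions, and they are deliberately crude.

```python
import re

# Crude passive-voice heuristic (an assumption for illustration): a form of
# "to be" followed by a word ending in "-ed". It misses irregular participles
# ("was written") and flags some non-passives.
BE_VERBS = r"(?:am|is|are|was|were|be|been|being)"
PASSIVE = re.compile(r"\b" + BE_VERBS + r"\s+\w+ed\b", re.IGNORECASE)


def basic_stats(text):
    """Return simple counts about a passage of prose."""
    words = re.findall(r"[A-Za-z']+", text)
    # Crude adverb heuristic: words ending in "-ly". This also catches
    # non-adverbs such as "family" and misses adverbs such as "often".
    ly_words = [w for w in words if w.lower().endswith("ly") and len(w) > 3]
    return {
        "word_count": len(words),
        "ly_adverbs": len(ly_words),
        "passive_hits": len(PASSIVE.findall(text)),
    }


sample = "The letter was delivered quickly. She quietly read it twice."
stats = basic_stats(sample)
print(stats)
```

A real course project would typically swap these regular expressions for a part-of-speech tagger, but the output is the same in kind: numbers about a text, not a substitute for reading it.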
